What We Think We Know: How Overconfidence Derails Clinical Trials

In clinical research, where accuracy, coordination, and compliance are non-negotiable, the greatest threat isn’t always a lack of knowledge. Sometimes, it’s the mistaken belief that we already understand more than we do.

Volume 34, Issue 3: 06-01-2025

This cognitive bias, known as the illusion of knowledge, occurs when individuals overestimate their grasp of complex concepts or systems. In practice, it means investigators may feel confident in a trial protocol they’ve barely reviewed, or teams might assume timelines are realistic without accounting for common delays. The illusion is subtle but pervasive, and it quietly undermines decision-making, planning, and learning across all phases of a clinical trial.

Recognizing and mitigating this bias isn’t just an academic exercise—it’s essential to avoiding costly missteps and ensuring study success.

The following examples, drawn from recent Applied Clinical Trials articles, show how the illusion of knowledge manifests in clinical research, along with strategies to mitigate its impact:

1. Underestimating Training Needs During Trial Startup

Manifestation of the Illusion:

In our most recent article, The Compounding Power of Training, we highlighted a common misconception: that traditional "check-the-box" training suffices for trial success.

This overconfidence leads sponsors to underinvest in comprehensive training, assuming that site teams possess adequate knowledge from the outset. Such assumptions can often result in protocol deviations, recruitment challenges, and data inconsistencies.

Mitigation Strategy:

- Engage in Explanatory Thinking: Encourage site teams to articulate their understanding of protocols and procedures. This practice reveals knowledge gaps and fosters deeper comprehension.

- Foster Intellectual Humility: Cultivate a training and learning culture where acknowledging uncertainty is valued, which prompts continuous learning and inquiry.

2. Overconfidence in Project Timelines Due to the Planning Fallacy

Manifestation of the Illusion:

In our article, Optimism’s Hidden Costs, we explored the “planning fallacy,” the tendency of teams to underestimate the time and resources required to complete trial startup activities successfully. This overoptimism stems from an illusion of control and understanding, leading to unrealistic timelines and reactive crisis management when challenges arise.

Mitigation Strategy:

- Encourage Curiosity and Dialogue: Promote open discussions about potential obstacles and uncertainties during planning and feasibility phases. This approach enables teams to develop more realistic timelines and contingency plans.

- Foster Intellectual Humility: Recognize and accept the inherent uncertainties in clinical trials, allowing for more adaptable and resilient planning.

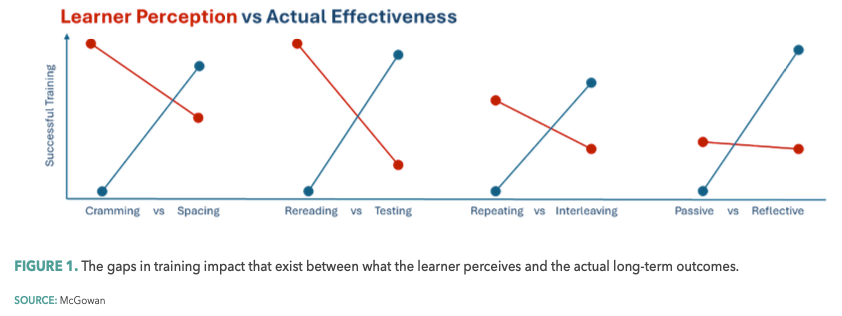

3. Preference for Passive Learning Over Effective Training Methods

Manifestation of the Illusion:

In our article, Rethinking Training, we emphasized that clinical trial staff often favor passive learning methods, believing them to be effective. This preference is yet another manifestation of the illusion of knowledge, where ease of learning is mistaken for actual understanding, leading to poor retention and application of critical information.

Mitigation Strategy:

- Engage in Explanatory Thinking: Implement training that requires active participation, such as problem-solving exercises that compel learners to process and apply information.

- Foster Intellectual Humility: Educate planners and learners about the benefits of “desirable difficulties”—challenging learning experiences that enhance retention—to shift preferences toward more effective training methods.

4. Overconfidence in Training Completion

Manifestation of the Illusion:

As we discussed in our article, Changing Behavior: Knowing Doesn’t Equal Doing, trial leaders often assume that once training is delivered, comprehension and performance will naturally follow. This illusion persists even when there’s little to no evidence that study teams truly understand the protocol or can apply it correctly under real-world conditions. Without measuring actual learning, sponsors are flying blind, confusing training completion with trial readiness.

Mitigation Strategy:

- Measure What Matters: Completion doesn’t equal comprehension. Use behavioral measures to reveal understanding, surface confusion, and flag underperformance. Track how well learners retain and apply protocol-critical concepts—not just whether they finished the training. The more precisely you measure, the better your decisions.

- Foster Intellectual Humility: Recognize that even experienced teams can misunderstand or misapply complex protocols. Make it standard practice to question assumptions, invite clarification, and validate understanding with real data. When you build systems that prioritize insight over assumption, readiness becomes measurable and actionable.

Ultimately, each of the strategies used to mitigate the illusion of knowledge—whether fostering intellectual humility, encouraging explanatory thinking, or measuring what matters—relies on one foundational commitment: investing in meaningful, evidence-informed training. Not training as a checkbox, but as a deliberate, ongoing process that surfaces false confidence, strengthens true understanding, and prepares teams for the complexity of real-world trials.

When organizations prioritize training that challenges assumptions, encourages curiosity, and builds cognitive resilience, they do more than educate and check the compliance box: they avoid costly delays and improve trial quality. Recognizing and addressing the illusion of knowledge isn’t just good practice; it’s a critical safeguard for trial success.

Brian S. McGowan, PhD, FACEHP, is Chief Learning Officer and Co-Founder, ArcheMedX, Inc.